Last time, I discussed why you as a developer might be interested in PowerShell and gave you some commands to start playing with. I said we’d cover reusable scripts, but I’m going to delay that until the next post, as I want to talk more about life in the shell…

PowerShell feels a lot like cmd.exe, but with far more flexibility and power. If you’re an old Unix hack like me, you’ll appreciate the ability to combine (aka pipe) commands together to do more complex operations. What makes PowerShell even more powerful than Unix shells is that, rather than passing strings between commands as Unix shells do, PowerShell passes objects. Let me prove it to you…

- At a PowerShell prompt, run “get-process” to get a list of running processes. (Remember that PowerShell uses singular nouns for consistency.)

- Use an array indexer to get the first process: “(get-process)[0]” (The parentheses tell PowerShell to run the command first, so that you can index into its output.)

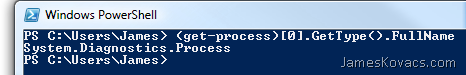

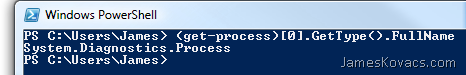

- Now let’s get really crazy… “(get-process)[0].GetType().FullName”

As a .NET developer, you should recognize “.GetType().FullName”. You’re getting the type object (a System.Type) for the object returned by (get-process)[0] and then asking it for its full type name. What does this command return?

That’s right! The PowerShell command, get-process, returns an array of System.Diagnostics.Process objects. So anything you can do to a Process object, you can do in PowerShell. To figure out what else we can do with a Process object, you can look it up in the MSDN docs or just ask PowerShell itself.
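To see for yourself that these really are Process objects, here’s a minimal sketch that grabs the current PowerShell process (via the automatic $PID variable) and uses ordinary .NET members on it. The particular properties are just picked for illustration.

```powershell
# Get the Process object for this PowerShell session; $PID is an automatic variable
$p = Get-Process -Id $PID

# It's a real .NET object, so GetType() works just as it does in C#
$typeName = $p.GetType().FullName
$typeName

# Public properties of System.Diagnostics.Process are available directly
$p.ProcessName
$p.Threads.Count

# Indexing into the array works too; negative indexes count from the end
$all = Get-Process
$first = $all[0]
$last = $all[-1]
$first.ProcessName
$last.ProcessName
```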

get-member -inputObject (get-process)[0]

Out comes a long list of methods, properties, script properties, and more. Methods and properties are the ones defined on the .NET object. Script properties, alias properties, property sets, etc. are defined as object extensions by PowerShell to make common .NET objects friendlier for scripting.
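Get-Member can also be narrowed with its -MemberType parameter, which helps when the full list scrolls off the screen. A minimal sketch that separates the plain .NET properties from the members PowerShell layers on top (the Name alias shown here is one of the extensions PowerShell defines for Process):

```powershell
$p = Get-Process -Id $PID

# Properties defined by the .NET class itself
$props = $p | Get-Member -MemberType Property
$props

# Members PowerShell adds through its extended type system
$p | Get-Member -MemberType AliasProperty, ScriptProperty

# Inspect a single member by name: Name is an alias for ProcessName
$nameMember = $p | Get-Member -Name Name
$nameMember.Definition
```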

Let’s try something more complex and find all processes using more than 200MB of memory:

get-process | where { $_.PrivateMemorySize -gt 200*1024*1024 }

Wow. We’ve got a lot to talk about. The pipe (|) takes the objects output by get-process and passes them as input to the next command, where, which is an alias for Where-Object. Where takes a scriptblock, denoted by {}, which is PowerShell’s name for a lambda function (aka anonymous delegate). Where evaluates the scriptblock once for each incoming object, with $_ referring to the current object, and passes along only the objects for which the scriptblock returns true. So we’re just looking at Process.PrivateMemorySize for each process and checking whether it is greater than 200 MB.
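Because a scriptblock is an object in its own right, you can store it in a variable and hand it to Where-Object later. This sketch also swaps in two refinements of my own, not part of the original command: the built-in 200MB numeric suffix instead of 200*1024*1024, and the PrivateMemorySize64 property, since .NET marks the 32-bit PrivateMemorySize property obsolete and it can’t represent sizes above 2 GB. The PrivateMB column name is made up for display.

```powershell
# MB is a numeric suffix: 200MB is the same number as 200*1024*1024
$threshold = 200MB

# A scriptblock is PowerShell's lambda; $_ is the pipeline object being tested
$isBig = { $_.PrivateMemorySize64 -gt $threshold }

# Where-Object passes along only the processes for which $isBig returns true
$bigProcesses = Get-Process | Where-Object $isBig

# Sort largest first and add a friendlier size column (hypothetical name)
$sizeColumn = @{ Name = 'PrivateMB'; Expression = { [math]::Round($_.PrivateMemorySize64 / 1MB, 1) } }
$bigProcesses |
    Sort-Object PrivateMemorySize64 -Descending |
    Select-Object ProcessName, Id, $sizeColumn

# Scriptblocks can also take parameters and be invoked directly with &
$double = { param($x) $x * 2 }
& $double 21    # outputs 42
```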

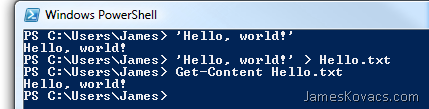

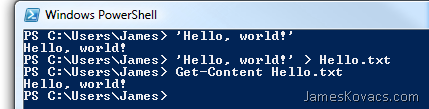

Now why does PowerShell use -gt, -lt, -eq, etc. for comparison rather than >, <, ==, etc.? The reason is that for decades shells have been using > and < for input/output redirection. Let’s write to the console:

'Hello, world!'

Rather than writing to the console, we can redirect the output to a file like this:

'Hello, world!' > Hello.txt

You’ll notice that a file called Hello.txt has been created. We can read its contents using Get-Content (or its alias, type).

get-content Hello.txt
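Here’s a small sketch of the full round trip: overwrite with >, append with >>, and read back with Get-Content. I’ve pointed it at a file in the temp directory (my own choice, just to keep the working folder clean). One thing to be aware of: in Windows PowerShell, > writes UTF-16 (Unicode) text by default, while PowerShell 6 and later default to UTF-8 without a BOM.

```powershell
# Build a path in the temp directory so we don't litter the current folder
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'Hello.txt'

'Hello, world!' > $path     # > creates or overwrites the file
'Hello again!' >> $path     # >> appends to it

# Get-Content returns one string per line
$lines = Get-Content $path
$lines

# Clean up the scratch file
Remove-Item $path
```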

Since > and < already have a well-established use in the shell world, the PowerShell team had to come up with another syntax for comparison operators. They turned to Unix once again, this time to the test command: PowerShell uses the same comparison operators (-eq, -ne, -gt, -lt, and so on) that the Unix test command has used for 30 years.*
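A quick sketch of these operators in action. Unlike the Unix test command, PowerShell also applies them to strings, case-insensitively by default; the c-prefixed variants such as -ceq are PowerShell’s case-sensitive forms, added here to round out the picture.

```powershell
# Test-style comparison operators return Booleans
$gt  = 5 -gt 3            # True
$lt  = 5 -lt 3            # False
$eq  = 'abc' -eq 'ABC'    # True: string comparison ignores case by default
$ceq = 'abc' -ceq 'ABC'   # False: the c prefix makes it case-sensitive
$ne  = 10 -ne 20          # True

$gt, $lt, $eq, $ceq, $ne

# By contrast, "5 > 3" is not a comparison at all:
# it would write 5 into a file named 3
```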

So, some helpful tidbits about piping and redirection…

- Use pipe (|) to pass objects returned by one command as input to the next command.

  - Example: ls | where { $_.Name.StartsWith('S') }

- Use output redirection (>) to send console output (aka stdout) to a file. (N.B. This overwrites the destination file. Use >> to append to the destination file instead.)

- Do not use input redirection (<) as it is not implemented in PowerShell v1.
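To try the ls example without depending on what’s in your current directory, here’s a sketch that builds a throwaway folder first; the file names are made up for illustration. It also shows one gotcha: .NET’s StartsWith is case-sensitive, whereas PowerShell’s -like operator is not. I’ve spelled out Get-ChildItem and Where-Object because the ls alias isn’t defined on non-Windows PowerShell.

```powershell
# Create a scratch directory containing a few hypothetical files
$dir = Join-Path ([System.IO.Path]::GetTempPath()) ([System.Guid]::NewGuid().ToString())
New-Item -ItemType Directory -Path $dir | Out-Null
foreach ($name in 'Sample.txt', 'small.txt', 'readme.txt') {
    New-Item -ItemType File -Path (Join-Path $dir $name) | Out-Null
}

# Each item piped to Where-Object is a FileInfo object
$caseSensitive   = Get-ChildItem $dir | Where-Object { $_.Name.StartsWith('S') }  # Sample.txt only
$caseInsensitive = Get-ChildItem $dir | Where-Object { $_.Name -like 's*' }       # Sample.txt and small.txt

$caseSensitive | Select-Object Name
$caseInsensitive | Select-Object Name

# Clean up the scratch directory
Remove-Item $dir -Recurse
```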

So there you have it. We can now manipulate objects returned by PowerShell commands just like any old .NET object, hook commands together with pipes, and redirect output to files. Happy scripting!

* From Windows PowerShell in Action by Bruce Payette, p. 101. This is a great book for anyone experimenting with PowerShell, with lots of useful examples and tricks of the PowerShell trade. Highly recommended.

Last night I gave a presentation on psake and PowerShell to the Virtual ALT.NET (VAN) group. I had a fun time demonstrating how to write a psake build script, examining some psake internals, discussing the current state of the project, and generally making a fool of myself by showing how much of a PowerShell noob I really am. I believe that the presentation was recorded and will be posted online in the next few days. Then you too can see me fumbling around trying to remember PowerShell syntax. I consider myself a professional developer when it comes to many areas, but in terms of PowerShell I am a hack who learns just enough to get the job done.

Unfortunately I’m going to have to postpone