[Code for this article is available on GitHub here.]

One of the new features in NHibernate 3 is the addition of a fluent API for configuring NHibernate through code. Fluent NHibernate has provided a fluent configuration API for a while, but now we have an option built into NHibernate itself. (Personally I prefer the new Loquacious API to Fluent NHibernate’s configuration API as I find Loquacious more discoverable. Given that Fluent NHibernate is built on top of NHibernate, you can always use Loquacious with Fluent NHibernate too. N.B. I still really like Fluent NHibernate’s ClassMap<T>, automapping capabilities, and PersistenceSpecification<T>. So don’t take my preference regarding fluent configuration as a denouncement of Fluent NHibernate.)

The fluent configuration API built into NHibernate is called Loquacious configuration and exists as a set of extension methods on NHibernate.Cfg.Configuration. You can access these extension methods by importing the NHibernate.Cfg.Loquacious namespace.

    var cfg = new Configuration();
    cfg.Proxy(p => p.ProxyFactoryFactory<ProxyFactoryFactory>())
       .DataBaseIntegration(db => {
           db.ConnectionStringName = "scratch";
           db.Dialect<MsSql2008Dialect>();
           db.BatchSize = 500;
       })
       .AddAssembly(typeof(Blog).Assembly)
       .SessionFactory().GenerateStatistics();

On the second line, we configure the ProxyFactoryFactory, which is responsible for generating the proxies needed for lazy loading. The ProxyFactoryFactory type parameter (stuff between the <>) is in the NHibernate.ByteCode.Castle namespace. (I have a reference to the NHibernate.ByteCode.Castle assembly too.) So we’re using Castle to generate our proxies. We could also use LinFu or Spring.

Setting db.ConnectionStringName causes NHibernate to read the connection string from the <connectionStrings/> config section of the [App|Web].config. This keeps your connection strings in an easily managed location without being baked into your code. You can perform the same trick in XML-based configuration by using the connection.connection_string_name property instead of the more commonly used connection.connection_string.
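As a rough illustration, the matching entry in [App|Web].config might look something like this. The name "scratch" comes from the code above; the connection string value is only a placeholder assumption and should be replaced with whatever your database requires.

```xml
<configuration>
  <connectionStrings>
    <!-- "scratch" matches db.ConnectionStringName.
         The connection string value below is a made-up placeholder. -->
    <add name="scratch"
         connectionString="Data Source=.;Initial Catalog=scratch;Integrated Security=True" />
  </connectionStrings>
</configuration>
```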

Configuring BatchSize turns on update batching in databases that support it. (Support is limited to SqlClient and OracleDataClient currently and relies on features of these drivers.) Update batching allows NHibernate to group together multiple, related INSERT, UPDATE, or DELETE statements in a single round-trip to the database. This setting isn’t strictly necessary, but can give you a nice performance boost with DML statements. The value of 500 represents the maximum number of DML statements in one batch. The choice of 500 is arbitrary and should be tuned for your application.

The assembly that we are adding is the one that contains our hbm.xml files as embedded resources. This allows NHibernate to find and parse our mapping metadata. If your mapping metadata is spread across multiple assemblies, you can call cfg.AddAssembly() once for each of them.

The last call, cfg.SessionFactory().GenerateStatistics(), causes NHibernate to output additional information about entities, collections, connections, transactions, sessions, second-level cache, and more. Although not required, it does provide additional useful information about NHibernate’s performance.

Notice that there is no need to call cfg.Configure(). cfg.Configure() is used to read in configuration values from [App|Web].config (from the hibernate-configuration config section) or from hibernate.cfg.xml. If we’re not using XML configuration, cfg.Configure() is not required.
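For comparison, here is a sketch of what a roughly equivalent hibernate.cfg.xml could look like. The property names are the standard NHibernate configuration keys; the mapping assembly name is a hypothetical stand-in for whichever assembly contains the Blog class, so treat it as an assumption.

```xml
<?xml version="1.0" encoding="utf-8"?>
<hibernate-configuration xmlns="urn:nhibernate-configuration-2.2">
  <session-factory>
    <!-- Proxy generation via Castle -->
    <property name="proxyfactory.factory_class">NHibernate.ByteCode.Castle.ProxyFactoryFactory, NHibernate.ByteCode.Castle</property>
    <!-- Same settings as the DataBaseIntegration block -->
    <property name="connection.connection_string_name">scratch</property>
    <property name="dialect">NHibernate.Dialect.MsSql2008Dialect</property>
    <property name="adonet.batch_size">500</property>
    <!-- Counterpart of SessionFactory().GenerateStatistics() -->
    <property name="generate_statistics">true</property>
    <!-- Hypothetical assembly name; use the assembly that embeds your hbm.xml files -->
    <mapping assembly="MyBlog.Domain" />
  </session-factory>
</hibernate-configuration>
```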

Loquacious and XML-based configuration are not mutually exclusive. We can combine the two techniques to allow overrides or provide default values – it all comes down to the order of the Loquacious configuration code and the call to cfg.Configure().

    var cfg = new Configuration();
    cfg.Configure();
    cfg.Proxy(p => p.ProxyFactoryFactory<ProxyFactoryFactory>())
       .SessionFactory().GenerateStatistics();

Note the cfg.Configure() on the second line. We read in the standard XML-based configuration and then force the use of a particular ProxyFactoryFactory and generation of statistics via Loquacious configuration.

If instead we make the call to cfg.Configure() after the Loquacious configuration, the Loquacious configuration provides default values, but we can override any and all values using XML-based configuration.

    var cfg = new Configuration();
    cfg.Proxy(p => p.ProxyFactoryFactory<ProxyFactoryFactory>())
       .DataBaseIntegration(db => {
           db.ConnectionStringName = "scratch";
           db.Dialect<MsSql2008Dialect>();
           db.BatchSize = 500;
       })
       .AddAssembly(typeof(Blog).Assembly)
       .SessionFactory().GenerateStatistics();
    cfg.Configure();
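To make the override idea concrete, a hibernate.cfg.xml along these lines would replace just two of the defaults set in code and leave the rest untouched. The connection string name "production" and the batch size of 100 are invented values for illustration only.

```xml
<?xml version="1.0" encoding="utf-8"?>
<hibernate-configuration xmlns="urn:nhibernate-configuration-2.2">
  <session-factory>
    <!-- Hypothetical overrides: both values are assumptions, not from the original project -->
    <property name="connection.connection_string_name">production</property>
    <property name="adonet.batch_size">100</property>
  </session-factory>
</hibernate-configuration>
```

The mapping assembly is deliberately left out here because the code above already registers it with AddAssembly().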

You can always mix and match the techniques by doing some Loquacious configuration before and some after the call to cfg.Configure().

WARNING: If you call cfg.Configure(), you need to have <hibernate-configuration/> in your [App|Web].config or a hibernate.cfg.xml file. If you don’t, NHibernate will throw a HibernateConfigException. The section or file can contain just an empty root element, but it needs to be there. Another option would be to check whether File.Exists("hibernate.cfg.xml") before calling cfg.Configure().

So there you have it: the new Loquacious configuration API in NHibernate 3. This introduction was not meant as a definitive reference, but as a jumping-off point. I would recommend that you explore other extension methods in the NHibernate.Cfg.Loquacious namespace as they provide the means to configure the second-level cache, current session context, custom LINQ functions, and more. Anything you can do in XML-based configuration can now be accomplished with Loquacious or the existing methods on NHibernate.Cfg.Configuration. So get out there and start coding – XML is now optional…

Thanks to everyone who came out to my session on Convention-over-Configuration on the Web at

Thanks to everyone who came out to my session on Convention-over-Configuration on the Web at

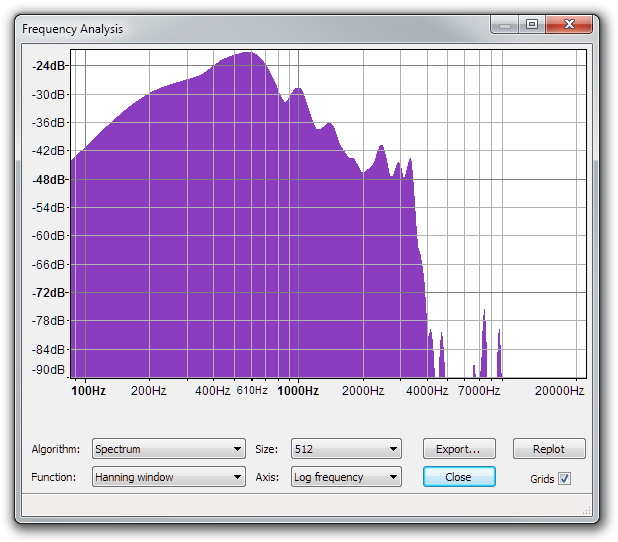

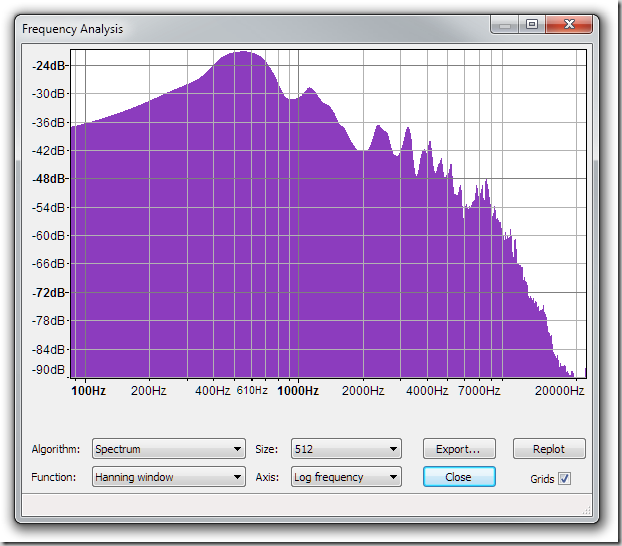

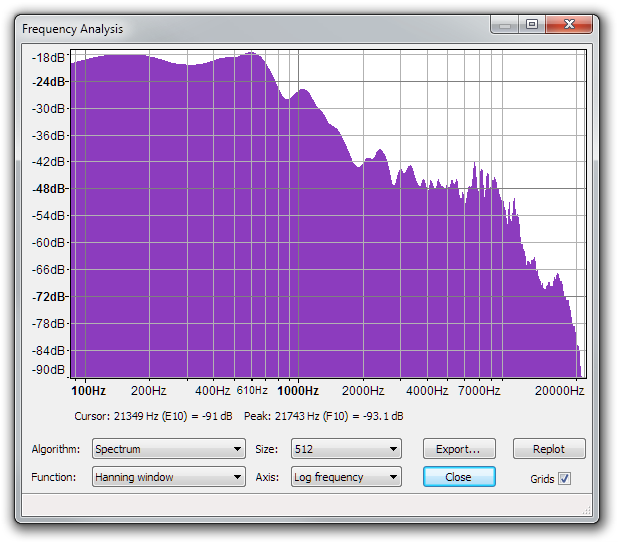

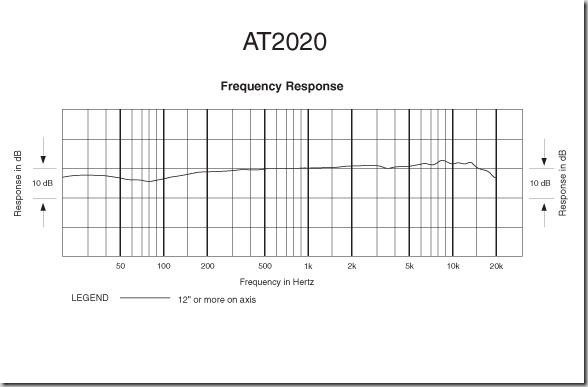

[audio:wp-content/uploads/LifeChatZX-6000.ogg|wp-content/uploads/LifeChatZX-6000.mp3]

[audio:wp-content/uploads/LifeChatZX-6000.ogg|wp-content/uploads/LifeChatZX-6000.mp3]

[audio:wp-content/uploads/LifeChatLX-3000.ogg|wp-content/uploads/LifeChatLX-3000.mp3]

[audio:wp-content/uploads/LifeChatLX-3000.ogg|wp-content/uploads/LifeChatLX-3000.mp3]

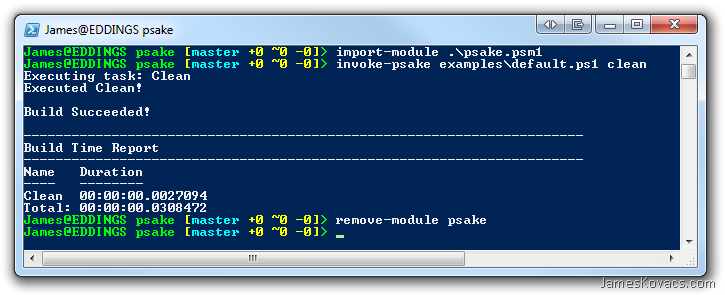

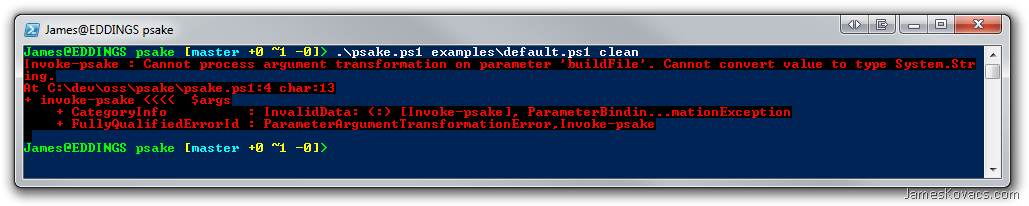

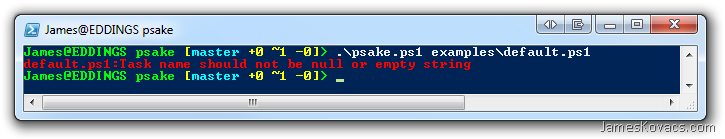

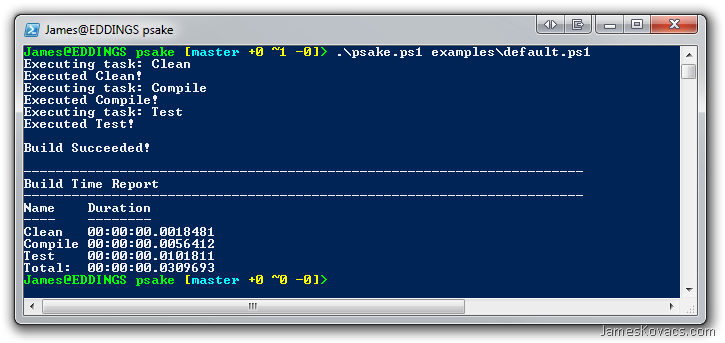

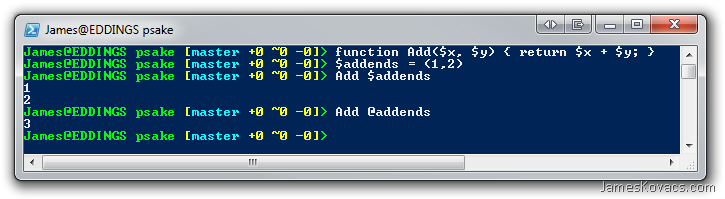

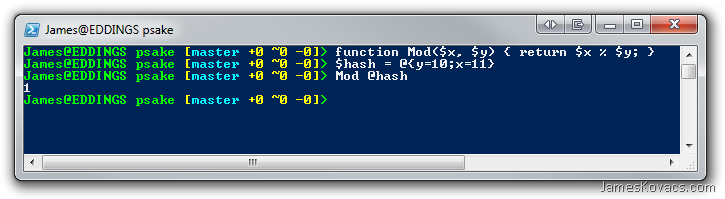

It is with great pleasure that I announce psake v4.00, which you can download

It is with great pleasure that I announce psake v4.00, which you can download  No, this post is not a tribute to the fabulously kitschy

No, this post is not a tribute to the fabulously kitschy

Another year, another

Another year, another

NHibernate Dojo

NHibernate Dojo jQuery Dojo

jQuery Dojo In addition to my two dojos and sessions by many other speakers, my friend, Donald “IglooCoder” Belcham will be giving a post-con on “Making the Most of Brownfield Application Development”. If you’ve got a legacy codebase that needs taming – and who doesn’t? – this is a great post-con to check out.

In addition to my two dojos and sessions by many other speakers, my friend, Donald “IglooCoder” Belcham will be giving a post-con on “Making the Most of Brownfield Application Development”. If you’ve got a legacy codebase that needs taming – and who doesn’t? – this is a great post-con to check out.